One of the more obvious ways to circumvent Device Guard deployments is by exploiting code integrity policy misconfigurations. Effectively auditing deployed policies requires a thorough understanding of the XML schema used by Device Guard. This post is intended to serve as documentation of the XML elements of a Device Guard code integrity policy, with a focus on auditing from the perspective of a pentester. Note that the schema used by Microsoft is not publicly documented and is subject to change in future versions. If things change, expect an update from me.

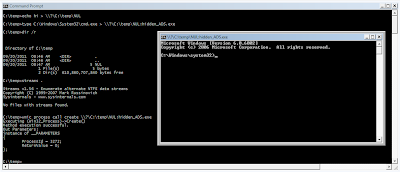

As a reminder, deployed code integrity policies are stored in binary form in %SystemRoot%\System32\CodeIntegrity\SIPolicy.p7b. If you're lucky enough to track down the original XML code integrity policy, you can validate that it matches the deployed SIPolicy.p7b by converting it to binary form with ConvertFrom-CIPolicy and then comparing the hashes with Get-FileHash. If you are unable to locate the original XML policy, you can recover one with the ConvertTo-CIPolicy function I wrote. Note, however, that ConvertTo-CIPolicy cannot recover all element ID and FriendlyName attributes because, unfortunately, converting to binary form is a lossy process.
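The hash comparison step can also be reproduced outside PowerShell. The following is a minimal Python sketch. It assumes you have already produced a binary policy from the XML with ConvertFrom-CIPolicy. The function names are hypothetical, and the paths you pass in are placeholders. If the deployed policy is signed, its bytes include the signature, so a byte-for-byte match against unsigned ConvertFrom-CIPolicy output should not be expected.

```python
import hashlib


def sha256_of(path: str) -> str:
    """Return the SHA-256 digest of a file as uppercase hex, like Get-FileHash."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read in chunks so large files are not loaded into memory at once.
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def policies_match(converted_bin: str, deployed_p7b: str) -> bool:
    """True if the ConvertFrom-CIPolicy output is byte-identical to the deployed policy."""
    return sha256_of(converted_bin) == sha256_of(deployed_p7b)
```

For example, `policies_match("converted.bin", r"C:\Windows\System32\CodeIntegrity\SIPolicy.p7b")` compares a freshly converted policy against the deployed one.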

For reference, here are some code integrity policies that I personally use. Obviously, yours will be different in your environment.

- https://gist.github.com/mattifestation/e3c8958d27c2f73b80f0132fc1ce2abd#file-finalpolicy-xml

- https://github.com/mattifestation/DeviceGuardBypassMitigationRules/blob/master/BypassDenyPolicy.xml

Policies are initially generated using the New-CIPolicy cmdlet.

The current (but subject to change) code integrity schema can be found here. It was extracted from an embedded resource in the ConfigCI cmdlet assembly, Microsoft.ConfigCI.Commands.dll.

What follows is a detailed breakdown of most of the code integrity policy XML elements you may encounter while auditing Device Guard deployments. Hopefully, Microsoft will provide such documentation at some point. In the meantime, I hope this is helpful! In a future post, I will conduct an actual code integrity policy audit and identify potential vulnerabilities that would allow for unsigned code execution.

VersionEx

Default value: 10.0.0.0

Purpose: An admin can set this to perform versioning of updated CI policies. This is what I do in BypassDenyPolicy.xml. VersionEx can be set programmatically with Set-CIPolicyVersion.
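If you compare two revisions of a policy, such as a deployed policy and a candidate update, compare VersionEx numerically rather than as strings. A string comparison would sort "10.0.0.10" before "10.0.0.9". The following is a minimal sketch with hypothetical helper names. It assumes only that a higher VersionEx marks a later revision chosen by the admin. It makes no claim about how Windows itself uses the value.

```python
def parse_version_ex(value: str) -> tuple[int, int, int, int]:
    """Parse a four-part VersionEx string such as "10.0.0.0" into integers."""
    parts = value.split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four dot-separated components, got {value!r}")
    major, minor, build, revision = (int(p) for p in parts)
    return major, minor, build, revision


def is_newer(candidate: str, current: str) -> bool:
    """Numeric, component-wise comparison (tuples compare left to right)."""
    return parse_version_ex(candidate) > parse_version_ex(current)
```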

PolicyTypeID

Default value: {A244370E-44C9-4C06-B551-F6016E563076}

Purpose: Unknown. This value is automatically generated upon calling New-CIPolicy. Unless Microsoft decides to change things, this value should always remain the same.

PlatformID

Default value: {2E07F7E4-194C-4D20-B7C9-6F44A6C5A234}

Purpose: Unknown. This value is automatically generated upon calling New-CIPolicy. Unless Microsoft decides to change things, this value should always remain the same.

Rules

The Rules element consists of multiple child Rule elements. A Rule element refers to a specific policy rule option, i.e. a specific configuration of Device Guard. Some, but not all, of these options are documented. Policy rule options are configured with the Set-RuleOption cmdlet.

Documented and/or publicly exposed policy rules

1) Enabled:UMCI

Description: Enforces user-mode code integrity for user mode binaries, PowerShell scripts, WSH scripts, and MSIs. The absence of this policy rule implies that whitelist/blacklist rules will only apply to drivers.

Operational impact: User mode binaries and MSIs not explicitly whitelisted will not execute. PowerShell will be placed into ConstrainedLanguage mode. Whitelisted, signed scripts have no restrictions and run in FullLanguage mode. WSH scripts (VBScript and JScript) not whitelisted per policy are unable to instantiate COM/ActiveX objects. Signed scripts whitelisted by policy have no such restrictions.

2) Required:WHQL

Description: Drivers must be Windows Hardware Quality Labs (WHQL) signed. Drivers signed with a WHQL certificate are indicated by a "Windows Hardware Driver Verification" EKU (1.3.6.1.4.1.311.10.3.5) in their certificate.

Operational impact: This will raise the bar on the quality (and arguably the trustworthiness) of the drivers that will be allowed to execute.

3) Disabled:Flight Signing

Description: Disables loading of flight-signed code, which is most commonly used with Insider Preview builds of Windows. A flight-signed binary/script is one that is signed by a Microsoft certificate with the "Preview Build Signing" EKU (1.3.6.1.4.1.311.10.3.27) applied. Thanks to Alex Ionescu for confirming this.

Operational Impact: Preview build binaries/scripts will not be allowed to load. In other words, if you're on a Windows Insider Preview build, don't expect your OS to function properly.

4) Enabled:Unsigned System Integrity Policy

Description: If present, the code integrity policy does not have to be signed with a code signing certificate. The absence of this rule option indicates that the code integrity policy must be signed by a whitelisted signer as indicated in the UpdatePolicySigners section below.

Operational Impact: Once signed, deployed code integrity policies can only be updated by signing a new policy with a whitelisted certificate. Even an admin cannot remove a deployed, signed code integrity policy. If your goal is to modify and redeploy a signed code integrity policy, you will need to steal one of the whitelisted UpdatePolicySigners code signing certificates.

5) Required:EV Signers

Description: All drivers must be EV (extended validation) signed.

Operational Impact: This will likely not be present as most 3rd party and OEM drivers are not EV signed. Supposedly, Microsoft is mandating that all drivers be EV signed starting with Windows 10 Anniversary Update. From my observation, this does not appear to be the case.

6) Enabled:Advanced Boot Options Menu

Description: By default, with a code integrity policy deployed, the advanced boot options menu is disabled.

Operational Impact: With this option present, the menu is available to someone with physical access. There are additional concerns associated with physical access to a Device Guard enabled system. Such concerns may be covered in a future blog post.

7) Enabled:Boot Audit On Failure

Description: If a driver fails to load during the boot process due to an overly restrictive code integrity policy, the system will be placed into audit mode for that session.

Operational Impact: If you could somehow get a driver to fail to load during the boot process, Device Guard would cease to be enforced.

8) Disabled:Script Enforcement

Description: This is not actually documented, but it is listed by 'Set-RuleOption -Help'. You would think that it does what its name says, but in practice it doesn't. Even with this option set, PowerShell and WSH remain locked down.

Operational Impact: None. It is unlikely that you would see this in production anyway.
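The descriptions above translate into a quick triage pass over an XML policy. The following is a minimal Python sketch. It assumes that the policy's default namespace is `urn:schemas-microsoft-com:sipolicy` and that each option is stored as the text of a `Rules/Rule/Option` element. The set of flagged options reflects only the operational impacts described in this post.

```python
import xml.etree.ElementTree as ET

# Assumed default namespace of code integrity policy XML.
NS = {"si": "urn:schemas-microsoft-com:sipolicy"}

# Options whose presence matters from an attacker's perspective, per the descriptions above.
RISKY_IF_PRESENT = {
    "Enabled:Unsigned System Integrity Policy": "policy can be replaced without a signing certificate",
    "Enabled:Advanced Boot Options Menu": "advanced boot options menu available with physical access",
    "Enabled:Boot Audit On Failure": "a boot-time driver load failure drops the session into audit mode",
}


def audit_rule_options(xml_text: str) -> list[str]:
    """Return human-readable findings for notable rule options in a policy."""
    root = ET.fromstring(xml_text)
    options = {
        opt.text.strip()
        for opt in root.findall("./si:Rules/si:Rule/si:Option", NS)
        if opt.text
    }
    findings = [f"{name}: {why}" for name, why in RISKY_IF_PRESENT.items() if name in options]
    # The absence of UMCI means whitelist/blacklist rules only apply to drivers.
    if "Enabled:UMCI" not in options:
        findings.append("Enabled:UMCI absent: user mode code is not restricted")
    return findings
```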

Undocumented and/or not publicly exposed policy rules

The following policy rule options are undocumented and it is unclear if they are supported or not. As of this writing, you will likely never see these options in a deployed policy.

- Enabled:Boot Menu Protection

- Enabled:Inherit Default Policy

- Allowed:Prerelease Signers

- Allowed:Kits Signers

- Allowed:Debug Policy Augmented

- Allowed:UMCI Debug Options

- Enabled:UMCI Cache Data Volumes

- Allowed:SeQuerySigningPolicy Extension

- Enabled:Filter Edited Boot Options

- Disabled:UMCI USN 0 Protection

- Disabled:Winload Debugging Mode Menu

- Enabled:Strong Crypto For Code Integrity

- Allowed:Non-Microsoft UEFI Applications For BitLocker

- Enabled:Always Use Policy

- Enabled:UMCI Trust USN 0

- Disabled:UMCI Debug Options TCB Lowering

- Enabled:Secure Setting Policy

EKUs

This element can contain a list of Extended/Enhanced Key Usages (EKUs) that can be applied to signers. When an EKU is applied to a signer rule, that EKU must be present in the certificate used to sign the binary/script.

EKU instances have a "Value" attribute consisting of an encoded OID. For example, if you want to enforce WHQL signing, the "Windows Hardware Driver Verification" EKU (1.3.6.1.4.1.311.10.3.5) would need to be applied to those drivers. When encoded, the "Value" attribute would be "010A2B0601040182370A0305", where the first byte, which would normally be 0x06 (absolute OID), is replaced with 0x01. The OID encoding process is described here. ConvertTo-CIPolicy decodes encoded OID values and resolves their original FriendlyName attributes.
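The encoding described above can be reproduced in a few lines. The following minimal Python sketch DER-encodes a dotted OID and then swaps the leading 0x06 tag for 0x01, as described in the paragraph above. A decoder is included for reading values out of an audited policy. Only short-form lengths (under 128 content bytes) are handled, which covers typical EKU OIDs. The function names are hypothetical.

```python
def encode_eku_value(oid: str) -> str:
    """Encode a dotted OID as a CI policy EKU "Value": DER OID with 0x06 replaced by 0x01."""
    arcs = [int(a) for a in oid.split(".")]
    if len(arcs) < 2:
        raise ValueError("an OID needs at least two arcs")
    # The first two arcs are packed into one value: 40 * X + Y.
    values = [40 * arcs[0] + arcs[1]] + arcs[2:]
    body = bytearray()
    for v in values:
        # Base-128, big-endian; all bytes except the last have the high bit set.
        chunk = [v & 0x7F]
        v >>= 7
        while v:
            chunk.append(0x80 | (v & 0x7F))
            v >>= 7
        body.extend(reversed(chunk))
    if len(body) > 0x7F:
        raise ValueError("long-form DER lengths are not handled in this sketch")
    return (bytes([0x01, len(body)]) + bytes(body)).hex().upper()


def decode_eku_value(value: str) -> str:
    """Reverse of encode_eku_value: recover the dotted OID string."""
    raw = bytes.fromhex(value)
    if raw[0] != 0x01 or raw[1] != len(raw) - 2:
        raise ValueError("unexpected prefix or length")
    values, acc = [], 0
    for b in raw[2:]:
        acc = (acc << 7) | (b & 0x7F)
        if not b & 0x80:  # last byte of this sub-identifier
            values.append(acc)
            acc = 0
    first = values[0]
    x = min(first // 40, 2)
    arcs = [x, first - 40 * x] + values[1:]
    return ".".join(str(a) for a in arcs)
```

For example, the "Preview Build Signing" EKU (1.3.6.1.4.1.311.10.3.27) mentioned earlier encodes to "010A2B0601040182370A031B".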

FileRules

These are rules specific to individual files, based either on their hash or on their filename (not the name on disk, but the one from the embedded PE resource) and file version (again, from the embedded PE resource). FileRules can be of the following types: FileAttrib, Allow, Deny. File rules can apply to specific signers or signing scenarios.

FileAttrib

These are used to reflect a user or kernel PE filename and minimum file version number. These can be used to either explicitly allow or block binaries based on filename and version.

Allow

These typically consist of just a file hash and are used to override an explicit deny rule. In practice, it is unlikely that you will see an Allow file rule.

Deny

These typically consist of just a file hash and are used to override whitelist rules when you want to block trusted code by hash.
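When auditing, it helps to see at a glance which kinds of file rules a policy contains. The following minimal Python sketch groups the children of FileRules by element type and keeps their attributes as-is. It assumes the `urn:schemas-microsoft-com:sipolicy` default namespace. The attribute names used in the test (ID, FriendlyName, Hash, FileName, MinimumFileVersion) are assumptions based on typical generated policies, and the code itself does not depend on them.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict

NS_URI = "urn:schemas-microsoft-com:sipolicy"  # assumed default namespace


def summarize_file_rules(xml_text: str) -> dict[str, list[dict[str, str]]]:
    """Group FileRules children (Allow, Deny, FileAttrib) by type, keeping their attributes."""
    root = ET.fromstring(xml_text)
    file_rules = root.find(f"{{{NS_URI}}}FileRules")
    if file_rules is None:
        return {}
    grouped: dict[str, list[dict[str, str]]] = defaultdict(list)
    for rule in file_rules:
        kind = rule.tag.split("}", 1)[-1]  # strip the "{namespace}" prefix
        grouped[kind].append(dict(rule.attrib))
    return dict(grouped)
```

Deny entries typically carry only a Hash, while FileAttrib entries are the ones to cross-reference against signer FileAttribRef elements.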

Signers

This section consists of all of the signing certificates that will be applied to rules in the signing scenario section. Each signer entry is required to have a CertRoot property where the Value attribute refers to the hash of the cbData blob of the certificate. The hashing algorithm used is dependent upon the hashing algorithm specified in the certificate. This hash serves as the unique identifier for the certificate. The CertRoot "Type" attribute will almost always be "TBS" (to be signed). The "WellKnown" type is also possible but will not be common.
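To check a CertRoot value against a certificate you have on hand, you need to hash the certificate's to-be-signed portion. The following minimal Python sketch rests on an assumption: that "TBS" here means the DER-encoded TBSCertificate, which is the first element of the X.509 Certificate SEQUENCE. It slices that element out with a tiny DER header reader. The caller supplies the hash algorithm to match the one specified in the certificate. For production use, a full ASN.1/X.509 parser would be more robust than this hypothetical helper.

```python
import hashlib


def _read_der_header(data: bytes, offset: int) -> tuple[int, int, int]:
    """Return (tag, header_length, content_length) for the DER element at offset."""
    tag = data[offset]
    first_len = data[offset + 1]
    if first_len < 0x80:  # short-form length
        return tag, 2, first_len
    num_bytes = first_len & 0x7F  # long-form: next num_bytes hold the length
    length = int.from_bytes(data[offset + 2 : offset + 2 + num_bytes], "big")
    return tag, 2 + num_bytes, length


def tbs_hash(cert_der: bytes, algorithm: str = "sha256") -> str:
    """Hash the TBSCertificate (first element of the outer Certificate SEQUENCE)."""
    tag, outer_hdr, _ = _read_der_header(cert_der, 0)
    if tag != 0x30:
        raise ValueError("certificate is not a DER SEQUENCE")
    tbs_tag, tbs_hdr, tbs_len = _read_der_header(cert_der, outer_hdr)
    if tbs_tag != 0x30:
        raise ValueError("TBSCertificate is not a SEQUENCE")
    tbs = cert_der[outer_hdr : outer_hdr + tbs_hdr + tbs_len]
    return hashlib.new(algorithm, tbs).hexdigest().upper()
```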

The signer element can have any of the following optional child elements:

CertEKU

One or more EKUs from the EKU element described above can be applied here. Ultimately, this would constrain a whitelist rule to code signed with certificates with specific EKUs, "Windows Hardware Driver Verification" (WHQL) probably being the most common.

CertIssuer

I have not personally seen this in practice, but it will likely contain the common name (CN) of the issuing certificate.

CertPublisher

This refers to the common name (CN) of the certificate. This element is associated with the "Publisher" file rule level.

CertOemID

This is often associated with driver signers. This will often have a third party vendor name associated with a driver signed with a "Microsoft Windows Third Party Component CA" certificate. If CertOemIDs were not specified for the "Microsoft Windows Third Party Component CA" signer, then you would implicitly be whitelisting all 3rd party drivers signed by Microsoft.
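That condition can be checked mechanically. The following minimal Python sketch flags signers that look like the "Microsoft Windows Third Party Component CA" signer but have no CertOemID child. It assumes the `urn:schemas-microsoft-com:sipolicy` default namespace and a `Name` attribute on Signer. Matching on Name is a heuristic, since a policy recovered with ConvertTo-CIPolicy may not carry the original name. Comparing CertRoot values would be more reliable if you know the CA's TBS hash.

```python
import xml.etree.ElementTree as ET

NS = {"si": "urn:schemas-microsoft-com:sipolicy"}  # assumed default namespace
THIRD_PARTY_CA = "Microsoft Windows Third Party Component CA"


def unconstrained_third_party_signers(xml_text: str) -> list[str]:
    """Return IDs of Third Party Component CA signers that carry no CertOemID."""
    root = ET.fromstring(xml_text)
    flagged = []
    for signer in root.findall("./si:Signers/si:Signer", NS):
        if THIRD_PARTY_CA not in signer.get("Name", ""):
            continue
        # No CertOemID means every 3rd party driver signed via this CA is whitelisted.
        if signer.find("si:CertOemID", NS) is None:
            flagged.append(signer.get("ID", "<no ID>"))
    return flagged
```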

FileAttribRef

There may be one or more references to FileAttrib rules where the signer rules apply only to the files referenced.

SigningScenarios

When auditing code integrity policies, this is where you will want to start your audit and then work backwards. It contains all the enforcement rules for drivers and user mode code. Signing scenarios consist of a combination of the individual elements discussed previously. There will almost always be two SigningScenario elements present:

- <SigningScenario Value="131" ID="ID_SIGNINGSCENARIO_DRIVERS_1"> - This scenario will consist of zero or more rules related to driver loading.

- <SigningScenario Value="12" ID="ID_SIGNINGSCENARIO_WINDOWS"> - This scenario will consist of zero or more rules related to user mode binaries, scripts, and MSIs.

Each signing scenario can have up to three subelements:

- ProductSigners - This will comprise all of the code integrity rules for drivers or user mode code depending upon the signing scenario.

- TestSigners - You will likely never encounter this. The purpose of this signers group is unclear.

- TestSigningSigners - You will likely never encounter this. The purpose of this signers group is unclear.

Each signers group (ProductSigners, TestSigners, or TestSigningSigners) may consist of any of the following subelements:

Allowed signers

These are the whitelisted signer rules. These will consist of one or more signer rules and optionally, one or more ExceptDenyRules which link to specific file rules making the signer rule conditional. In practice, ExceptDenyRules will likely not be present.

Denied signers

These are the blacklisted signer rules. These rules will always take priority over allow rules. These will consist of one or more signer rules and optionally, one or more ExceptAllowRules which link to specific file rules making the signer rule conditional. In practice, ExceptAllowRules will likely not be present.

FileRulesRef

These will consist of individual file allow or deny rules. For example, if there are individual files to be blocked by hash, such rules will be included here.
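Starting from SigningScenarios and working backwards amounts to resolving signer references against the Signers section. The following minimal Python sketch does this for ProductSigners/AllowedSigners. It assumes the `urn:schemas-microsoft-com:sipolicy` default namespace and assumes each AllowedSigner references its Signer through a `SignerId` attribute. Both assumptions reflect typical generated policies rather than a published schema.

```python
import xml.etree.ElementTree as ET

NS = {"si": "urn:schemas-microsoft-com:sipolicy"}  # assumed default namespace


def allowed_signers_by_scenario(xml_text: str) -> dict[str, list[str]]:
    """Map each SigningScenario Value (e.g. "131" or "12") to its allowed product signers."""
    root = ET.fromstring(xml_text)
    # Index Signer elements by ID so AllowedSigner references can be resolved.
    signers = {s.get("ID"): s for s in root.findall("./si:Signers/si:Signer", NS)}
    path = "./si:ProductSigners/si:AllowedSigners/si:AllowedSigner"
    result: dict[str, list[str]] = {}
    for scenario in root.findall("./si:SigningScenarios/si:SigningScenario", NS):
        names = []
        for ref in scenario.findall(path, NS):
            signer_id = ref.get("SignerId")
            signer = signers.get(signer_id)
            if signer is None:
                names.append(f"<unresolved {signer_id}>")
            else:
                # Fall back to the ID when Name is missing (e.g. a recovered policy).
                names.append(signer.get("Name", signer_id))
        result[scenario.get("Value")] = names
    return result
```

From here, each resolved Signer can be inspected for its CertRoot, CertEKU, CertPublisher, and CertOemID constraints. The same pattern extends to DeniedSigners and FileRulesRef.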

UpdatePolicySigners

If policy signing is required, as indicated by the absence of the "Enabled:Unsigned System Integrity Policy" policy rule option, a deployed policy must be signed by one of the signers indicated here. The only way to modify a deployed policy in this case would be to re-sign the policy with one of these certificates. UpdatePolicySigners is updated using the Add-SignerRule cmdlet.

If a binary policy (SIPolicy.p7b) is signed, you can validate its signature with Get-CIBinaryPolicyCertificate.
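You can make a quick first triage pass on a SIPolicy.p7b without any Windows tooling. The following minimal Python sketch is a heuristic only. It assumes that a signed policy is a PKCS #7/CMS SignedData blob, so the file should begin with a DER SEQUENCE whose ContentInfo carries the signedData OID (1.2.840.113549.1.7.2). It does not validate the signature or identify the signer. Use Get-CIBinaryPolicyCertificate for that.

```python
# DER encoding of OID 1.2.840.113549.1.7.2 (PKCS #7 signedData), including tag and length.
SIGNED_DATA_OID = bytes.fromhex("06092A864886F70D010702")


def looks_signed(policy_bytes: bytes) -> bool:
    """Heuristic: DER SEQUENCE (0x30) with the signedData OID near the start."""
    return policy_bytes[:1] == b"\x30" and SIGNED_DATA_OID in policy_bytes[:32]


def looks_signed_file(path: str) -> bool:
    """Apply the heuristic to the first bytes of a policy file."""
    with open(path, "rb") as f:
        return looks_signed(f.read(32))
```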

CISigners

These will consist of mirrored signing rules from the ID_SIGNINGSCENARIO_WINDOWS signing scenario. These are related to the trusting of signers and signing levels by the kernel. These are auto-generated and not configurable via the ConfigCI PowerShell module. These entries should not be modified.

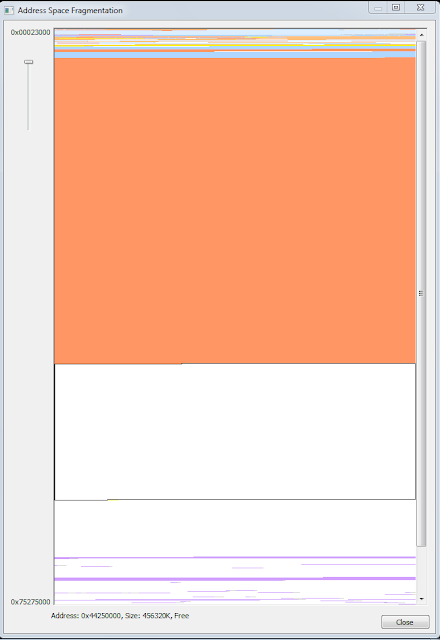

HvciOptions

This specifies the configured hypervisor code integrity (HVCI) option. HVCI implements several kernel exploitation mitigations, including W^X kernel memory, and restricts the ability to allocate any executable memory for code that isn't explicitly whitelisted. Basically, HVCI allows the system to continue to enforce code integrity even if the kernel is compromised. HVCI settings are configured using the Set-HVCIOptions cmdlet.

Any combination of the following values is accepted:

0 - Not configured

1 - Enabled

2 - Strict mode

4 - Debug mode
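Because these values are bit flags, a combined value such as 3 means Enabled plus Strict mode. The following is a minimal Python sketch for decoding an HvciOptions value found in a policy. The helper name is hypothetical, and the flag table is taken directly from the list above.

```python
HVCI_FLAGS = {1: "Enabled", 2: "Strict mode", 4: "Debug mode"}


def decode_hvci_options(value: int) -> list[str]:
    """Split an HvciOptions value into the flag names listed above."""
    if value == 0:
        return ["Not configured"]
    unknown = value & ~sum(HVCI_FLAGS)  # any bits beyond 1 | 2 | 4
    if unknown:
        raise ValueError(f"unknown HVCI bits: {unknown:#x}")
    return [name for bit, name in HVCI_FLAGS.items() if value & bit]
```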

HVCI is not well documented as of this writing. Here are a few references to it:

- https://blogs.msdn.microsoft.com/windows_hardware_certification/2015/05/22/driver-compatibility-with-device-guard-in-windows-10/ - Ultimately, drivers need to conform to HVCI requirements if they are to be able to load with HVCI enabled.

- http://www.alex-ionescu.com/blackhat2015.pdf

Outside of Microsoft, Alex Ionescu and Rafal Wojtczuk are experts on this subject.

Settings

Settings may consist of one or more provider/value pairs. These options are referred to internally as "Secure Settings". The range of possible values that can be set here is unclear. The only entry you are likely to see is a PolicyInfo provider setting, where a user can specify an explicit Name and Id for the code integrity policy that will be reflected in Microsoft-Windows-CodeIntegrity/Operational events. PolicyInfo settings can be set with the Set-CIPolicyIdInfo cmdlet.
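Pulling the PolicyInfo Name and Id out of a policy lets you correlate it with CodeIntegrity event log entries. The following is a minimal Python sketch. It assumes the `urn:schemas-microsoft-com:sipolicy` default namespace and a layout of `Setting` elements with `Provider` and `ValueName` attributes whose value sits in a `Value/String` child. This layout is an assumption about how Set-CIPolicyIdInfo writes the XML, not documented schema.

```python
import xml.etree.ElementTree as ET

NS = {"si": "urn:schemas-microsoft-com:sipolicy"}  # assumed default namespace


def policy_info(xml_text: str) -> dict[str, str]:
    """Return PolicyInfo settings (e.g. Name, Id) keyed by their ValueName attribute."""
    root = ET.fromstring(xml_text)
    info = {}
    for setting in root.findall("./si:Settings/si:Setting", NS):
        if setting.get("Provider") != "PolicyInfo":
            continue  # only PolicyInfo is handled in this sketch
        string_value = setting.find("./si:Value/si:String", NS)
        if string_value is not None and string_value.text is not None:
            info[setting.get("ValueName")] = string_value.text
    return info
```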